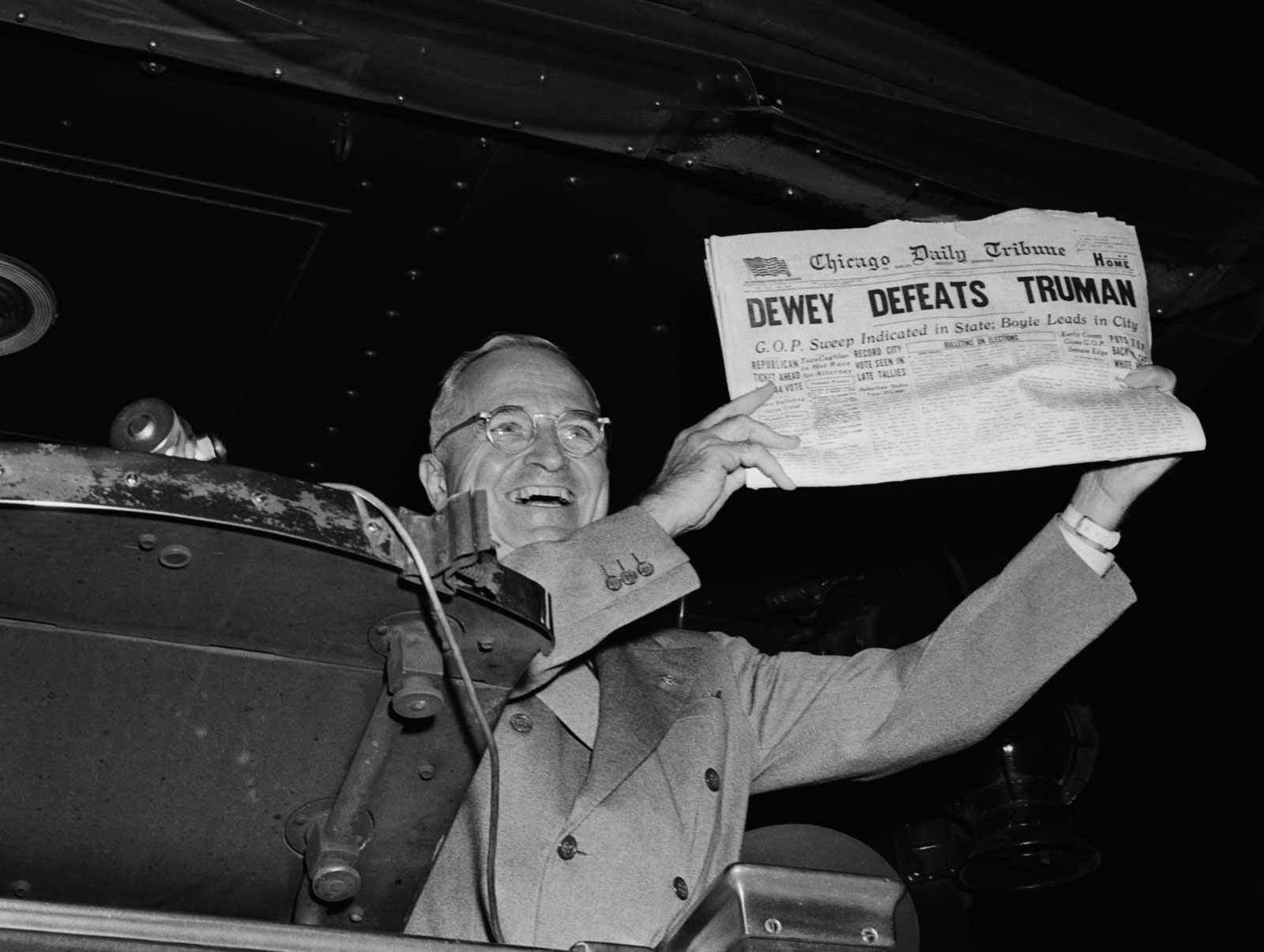

November 2, 1948: Election Day. Despite the best efforts of then-President Harry Truman, all major polls had long called the race for his challenger, New York Gov. Thomas Dewey. Hobbled by a printers' strike, the Chicago Daily Tribune took a chance that would immortalize the paper in the annals of American politics. It printed its morning edition before the results came in, plastering three words in large bold lettering across the front page: “Dewey Defeats Truman.”

The rest, of course, is history. Truman won the election, and two days later he posed with the erroneous headline for a photograph that, to this day, captures the particular elation that comes only from proving other people wrong. On election night, Truman triumphed not only over Dewey and the Tribune but also over a polling industry still in its infancy.

Experts disagree over what exactly caused the 1948 election polls to miss. Some, like professional pollster George Gallup Jr., point to the fact that little to no polling was done in the final weeks of the campaign, on the assumption that developments so late in the race wouldn't affect the outcome. Others, like pollster Burns Roper, suggested that because polling had come into being under President Roosevelt, it was untested in an era without Roosevelt himself polarizing the national debate. According to Warren Mitofsky, a pioneer of modern telephone polling methods, “Political polling was ‘non-probability,’ and for a number of years they got away with it. In 1948, they got burned.”

This reliance on ‘non-probability’ polling meant that poll numbers were, at the time, taken at face value, with no proper accounting for the uncertainty involved in using a sample to predict the opinions of the population at large. Whether or not this lack of statistical interpretation was decisive, one thing was clear in 1948: the failure of the media to accurately predict the election fell squarely on the shoulders of pollsters.

As a result of their ineptitude, the major polling firms of the time paid the price in clients: 30 newspapers would cancel their subscriptions to Gallup’s polling service in the aftermath of the 1948 election, and the fight to get them back on board would lead to vast improvements in methodology and accuracy that underpin the polling industry as it exists today.

The many parallels between 2016 and 1948 are not lost on the commentators of our age. The New York Times called the 2016 election “A Dewey Defeats Truman Lesson for the Digital Age,” noting that “All the dazzling technology, the big data and the sophisticated modeling that American newsrooms bring to the fundamentally human endeavor of presidential politics could not save American journalism from yet again being behind the story.”

Despite the glaring similarities, the upset of 2016 cannot be adequately explained as a polling failure akin to that of 1948. After all, 68 years of statistical and probability theory on how to get from naive polling to accurate predictions separate the two elections. Stopping polling three weeks before the election, working with a dearth of past election data, and the other “non-probability” practices that plagued the polling of 1948 are considered unacceptable for even the most rudimentary modern pollster.

In 1948, the confidence interval, a mathematical tool that quantifies the uncertainty of a polling estimate, was a nascent concept, conceived only 11 years prior. Today, it underpins the methodology of even the most insignificant poll. The failure of the polls to predict the result of the 2016 election cannot be explained away as a case of “rookie mistakes” the way the failures of 1948 could.
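As a worked illustration of what a confidence interval says, here is the standard textbook normal approximation for a simple random sample. It is not any particular pollster's method, and the sample size and support share below are hypothetical. If $n$ respondents are polled and a share $\hat{p}$ of them back a candidate, the 95% confidence interval for that candidate's true support is

$$
\hat{p} \pm 1.96\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}
$$

With a hypothetical poll of $n = 1000$ and $\hat{p} = 0.50$, the margin of error works out to

$$
1.96\sqrt{\frac{0.5 \times 0.5}{1000}} = 1.96 \times 0.0158 \approx 0.031,
$$

or roughly plus or minus 3.1 percentage points. The formula assumes a probability sample, which is part of why the non-probability polls of 1948 could not support statements of this kind.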

If the statistical models for the 2016 election had problems, they were subtle next to the glaring comedy of errors of 1948. In fact, the model of one of the most prominent forecasters, FiveThirtyEight, gave Trump a 29% chance to win the election, putting his victory firmly in the realm of things that could realistically occur. The website's Electoral College model was based on 20,000 simulations of possible election results, each drawing an outcome in every state from a probability distribution built on the polling data available at the time. In 29% of those simulations, 5,800 of them, the model showed Trump winning the contest. Some of those simulations may even have matched the precise Electoral College map that emerged on election night.
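To make the simulation idea concrete, here is a toy Monte Carlo sketch in Python. It is not FiveThirtyEight's model: the function name `simulate_win_probability`, the seven-state map, the polled margins, and the error sizes (`national_sd`, `state_sd`) are all hypothetical. Each run adds a polling error shared by every state, plus a state-specific error, to each state's polled margin, awards that state's electoral votes to whoever leads, and records whether candidate A reaches a majority.

```python
import random


def simulate_win_probability(states, n_sims=20_000, national_sd=3.0,
                             state_sd=4.0, seed=0):
    """Estimate the share of simulated elections that candidate A wins.

    states: list of (electoral_votes, polled_margin) pairs, where
            polled_margin is A's lead over B in percentage points
            (negative means B leads). All inputs are hypothetical.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    total_ev = sum(ev for ev, _ in states)
    wins = 0
    for _ in range(n_sims):
        # Assumption: one polling error shared by every state in this run.
        national_error = rng.gauss(0.0, national_sd)
        ev_for_a = 0
        for ev, margin in states:
            # Add a state-specific error on top of the shared one.
            simulated_margin = margin + national_error + rng.gauss(0.0, state_sd)
            if simulated_margin > 0:
                ev_for_a += ev
        # A needs a strict majority of the electoral votes in play.
        if ev_for_a > total_ev / 2:
            wins += 1
    return wins / n_sims


# Hypothetical seven-state map: (electoral votes, A's polled lead in points).
toy_map = [(29, 1.0), (20, 2.0), (18, -1.5), (16, 3.0),
           (15, -0.5), (10, 2.5), (6, -3.0)]

p = simulate_win_probability(toy_map)
print(f"Candidate A wins in {p:.1%} of simulations")
print(f"Candidate B wins in {1 - p:.1%} of simulations")
```

The shared error term is a design assumption: polling misses tend to run in the same direction across many states, so treating every state as independent would make an upset look less likely than it is. Turning a share of runs into a count is the same arithmetic as above: 0.29 × 20,000 = 5,800.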

The problem of 2016 differs from the problem of 1948 in that the major error lay in the media's interpretation of probabilistic predictions rather than in the predictions themselves. Nate Silver, the leading statistician of FiveThirtyEight, has written at length about this problem, stating that “the reporting was much more certain of Clinton’s chances than it should have been based on the polls.”

When media outlets are presented with quantitative evidence, they often sacrifice an accurate account of that evidence's uncertainty for the sake of a succinct and provocative headline. The phenomenon is common in reporting on scientific studies, where conclusions like “Glass of Red Wine Equivalent to Hour of Gym Time” are parroted without any mention of the experiment’s methodology. The same mistakes with regard to 2016 polling data created a media landscape that drastically overestimated Clinton's chances of winning.

News outlets have rigid journalistic standards for grammar, profanity, sourcing, and many other aspects of reporting, so why has journalism not collectively adopted standards for interpreting statistical information? News organizations could take many simple steps to improve the quality of data-driven journalism, such as making it a rule to report averages of recent polls instead of the results of individual polls.

When media institutions project an air of certainty over a probabilistic prediction and turn out to be wrong, they undercut the credibility of the media in an era when its opponents seize every opportunity to undermine its influence. Each of the sixty-plus times our President has bragged about his electoral upset has also been an attack on the media for its failure to accurately convey his chance of winning.

Some may argue that the media should not be blamed for taking polling data at face value. After all, what purpose do polls serve if they cannot answer the questions we care about? Unfortunately, short of falling back on the non-rigorous methods the media already uses for questions that lack any quantitative evidence, the data that pollsters and statisticians provide is the best window to the truth. With the benefit of historical perspective, we might forgive the journalists of 1948 for misinterpreting nascent forms of polling, but journalists in 2016 no longer have that luxury. We can do better. We need to do better.

Featured Image Source: https://i.imgur.com/ofRAI3A.jpg
